For a moment, let's forget the question and look at a totally different question – what does your desktop look like? Stop reading this for a moment, minimize all windows and have a look at your desktop.

You would see a set of icons (files), right?

Ok, let's say you repeat this experiment sometime later, say a month from now. What would it look like? A similar set of files?

Ok, now how different is it from your current desktop?

If I am right, there is a very strong probability that your desktop reflects your current interests. If you use your computer to listen to songs, then you will see some directories containing songs, maybe a bunch of players, etc. And if you are a game buff, you will probably see a list of shortcuts to games.

What I am getting at is that the desktop is contextual: at any time, I can get a fair idea of how you use your system just by looking at your desktop. Although in terms of implementation the desktop is just another folder, the way users use it is quite different.

Once in a while you spend time re-organizing things, moving things away from your cluttered desktop (and this happens just like in real life 🙂 ). Why do you do that? The reason is not just that your desktop is cluttered, but also that your interests have changed over time.

One more small observation. If you are in the habit of using more than one system, you will notice that the desktop reflects different things on different systems.

Ok, now to the question of a semantic desktop. My idea of a semantic desktop is that of an intelligent desktop that knows what you are currently interested in, shows you what you like at the moment, and silently archives things from your desktop (or at least moves them away from the 'desktop') as your interests change and it sees that they are no longer relevant.

It might also contain information about the latest music if you are a music buff, or maybe some game that you might enjoy playing if you are interested in games.
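To make the 'silently archives things' part a little more concrete, here is a rough sketch in Python. It is only an illustration under my own assumptions: the function name `archive_stale_items`, the 30-day threshold and the use of file timestamps as a stand-in for 'interest' are all hypothetical, and a real semantic desktop would need far richer signals than this.

```python
import shutil
import time
from pathlib import Path
from typing import List, Optional


def archive_stale_items(
    desktop: Path,
    archive: Path,
    max_idle_days: float = 30.0,      # assumed threshold, not from any real system
    now: Optional[float] = None,
) -> List[Path]:
    """Move desktop entries that have not been touched recently into `archive`.

    'Touched' is approximated by the later of the access and modification
    timestamps. This is a crude proxy: many file systems update access
    times lazily or not at all.
    """
    now = time.time() if now is None else now
    cutoff = now - max_idle_days * 86400  # seconds per day
    archive.mkdir(parents=True, exist_ok=True)

    moved = []
    for item in sorted(desktop.iterdir()):
        # Never archive the archive folder itself if it lives on the desktop.
        if item.resolve() == archive.resolve():
            continue
        info = item.stat()
        last_used = max(info.st_atime, info.st_mtime)
        if last_used >= cutoff:
            continue  # still "interesting"
        target = archive / item.name
        if target.exists():
            continue  # do not overwrite something archived earlier
        shutil.move(str(item), str(target))
        moved.append(target)
    return moved
```

Calling `archive_stale_items(Path.home() / "Desktop", Path.home() / "Desktop Archive")` would move anything untouched for a month out of the way, assuming your desktop folder actually lives at that path. Nothing is deleted, which matches the idea of moving things away from the 'desktop' rather than losing them.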

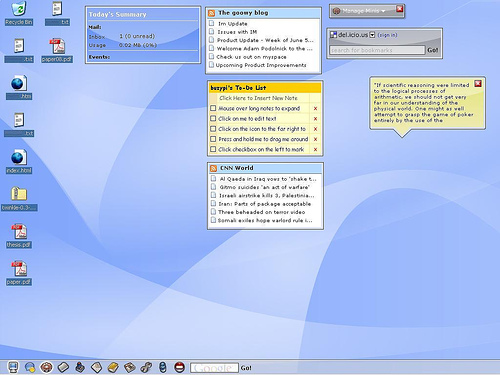

You might now wonder: how different is this from the widgets that we use on our desktops? Well, there are some differences.

Widgets are 'floating' on top of your desktop. In other words, they don't form part of your desktop, and I see them as a workaround for the lack of support from operating systems (or the desktop environment) for a true semantic desktop.

My idea of a semantic desktop is that of a 'dynamically changing background'. The background image should also have other information embedded in it, and this information should be real-time. It could be your contacts, local news, a music player, what not!

The closest thing I can think of right now is setting a 'start page' as your desktop background. A start page could be something like Google IG, My Yahoo, My MSN or, say, Goowy.

Of course, we are not even close to what I see as a real semantic desktop. But I guess we are making some major advancements in this direction, and the day is not far off when I will see my information totally organized and 'information-on-demand' realized.

So to wind up, how can this happen? Being a semantic web enthusiast, I naturally expect the semantic web to solve the problem of providing data and applying views on data stores. Then there is a need for operating systems (or the desktop environment) to provide support for such an environment. For example, the synchronization interval in the case of the example above is 1 day! That is not even close to 'real-time'. The widgets that we see today should not be floating around, but should be truly embedded into the desktop. They should pop up when we need to interact with them, but otherwise stay there just as providers of information.

Now someone may say that widgets can be configured to not float around. For example, Yahoo Widgets has the option of Konsposé mode or of moving the widgets to a lower layer.

I, however, feel there are vital differences between widgets and semantic desktops.

1. I expect the widgets not to interfere with the rest of my icons. So an “Auto arrange” should arrange the icons and widgets in such a way that they don't overlap.

2. A double click on a widget should activate the widget and I should be able to interact with it. Clicking on an empty space should embed the widget back into the desktop.

3. I should be able to resize, minimize/restore widgets at will. Each widget should have a set of configurable properties that I can set through a right-click.

This clearly indicates that widgets require more support from the operating system (or the desktop environment).
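The first point in the list, an "Auto arrange" that keeps icons clear of widgets, is easy enough to sketch. The Python below is only a toy under my own assumptions: the `Rect` type, the `auto_arrange` name, the 64-pixel grid cell and the column-by-column fill order are all made up for illustration and do not correspond to any real desktop environment's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rect:
    x: int
    y: int
    w: int
    h: int

    def overlaps(self, other: "Rect") -> bool:
        # Two rectangles overlap only if they intersect on both axes.
        return (
            self.x < other.x + other.w
            and other.x < self.x + self.w
            and self.y < other.y + other.h
            and other.y < self.y + self.h
        )


def auto_arrange(
    icon_count: int,
    widgets: List[Rect],
    desktop_w: int,
    desktop_h: int,
    cell: int = 64,  # assumed icon cell size in pixels
) -> List[Rect]:
    """Return one grid slot per icon, skipping every cell a widget covers.

    Slots are filled column by column, top to bottom.
    """
    slots: List[Rect] = []
    if icon_count == 0:
        return slots
    for x in range(0, desktop_w - cell + 1, cell):
        for y in range(0, desktop_h - cell + 1, cell):
            slot = Rect(x, y, cell, cell)
            if any(slot.overlaps(widget) for widget in widgets):
                continue  # this cell belongs to a widget
            slots.append(slot)
            if len(slots) == icon_count:
                return slots
    raise ValueError("not enough free desktop space for all icons")
```

For instance, with one 64x64 widget in the top-left corner, `auto_arrange(2, [Rect(0, 0, 64, 64)], 256, 128)` places the first icon just below the widget and the second at the top of the next column. The point is that the arranger has to know where the widgets are, which is exactly the kind of support that has to come from the desktop itself.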

Let me be optimistic and expect some support soon.