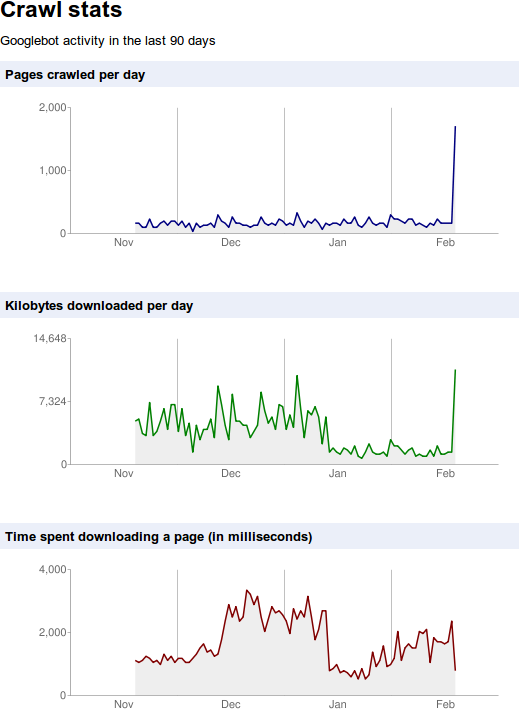

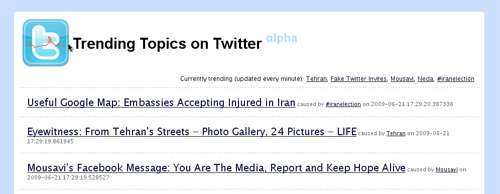

Last June, when I built the Twitter Trending Topics app on Google AppEngine, I mentioned quite a few issues with building applications on it. After giving the platform about 9 months to mature, I thought I would take another look with a fresh perspective on where it stands.

The first thing I wanted to try was reviving my old application. It had been inactive because it had exceeded the total stored data quota, and I never found the time to revive it.

One of the biggest issues I mentioned last time was the inability to delete data from the application easily. There is an upper limit of 1GB on total stored data. Considering that the data is schema-less (which means you need more space to store the same data than in a relational database), this limit is severely restrictive compared to the other quotas that are imposed. I had about 800,000 entries of a single kind (the equivalent of a table) to delete!

So I started looking for ways to delete all the data and came across this post. I decided to go with the approach mentioned there. The approach still seems to be to delete data in chunks; there is no simple way out. The maximum number of entries allowed in a fetch call is 500, which means I need at least 1,600 calls to delete all the data.

Anyway, I wrote a simple script as described in that post and ran it. I experimented with various chunk sizes and found that 300 worked best; anything larger either took a long time or timed out.
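As a quick check on the call counts, the number of fetch-and-delete round trips is a ceiling division of the entry count by the chunk size. The sketch below uses the figures from this post (roughly 800,000 entries, the 500-entry fetch cap, and the chunk size of 300); `calls_needed` is a name made up for illustration.

```python
def calls_needed(total_entities, chunk_size):
    """Return how many fetch-and-delete round trips are required."""
    # Ceiling division without floats: a leftover partial chunk needs one more call.
    return (total_entities + chunk_size - 1) // chunk_size


TOTAL = 800000  # approximate entry count quoted in this post

print(calls_needed(TOTAL, 500))  # at the 500-entry fetch cap
print(calls_needed(TOTAL, 300))  # at the chunk size of 300
```

At 300 entries per chunk this comes to 2,667 calls rather than 1,600.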

Here is the code that I executed:

```python
from google.appengine.ext import db
from <store> import <kind>

def delete_all():
    i = 0
    while True:
        db.delete(<kind>.all().fetch(300))
        i = i + 1
        print i
```

I saved this file as purger.py and ran it from the interactive console:

```
$ python appengine_console.py twitter-trending-topics
App Engine interactive console for twitter-trending-topics
>>> import purger
>>> purger.delete_all()
```
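The loop in `delete_all` has no exit condition, so it keeps issuing fetches even after the last entry is gone. A minimal sketch of a variant that stops on an empty fetch, and can optionally stop after a fixed number of chunks, might look like the following. `fetch_chunk` and `delete_chunk` are hypothetical callables standing in for `<kind>.all().fetch(n)` and `db.delete`; the in-memory list is only there so the example runs outside App Engine.

```python
def delete_in_chunks(fetch_chunk, delete_chunk, chunk_size=300, max_chunks=None):
    """Delete entities chunk by chunk; return the number of entities deleted.

    fetch_chunk(n)         -> list of up to n entities
                              (hypothetical stand-in for <kind>.all().fetch(n))
    delete_chunk(entities) -> None (hypothetical stand-in for db.delete)
    """
    deleted = 0
    chunks = 0
    while max_chunks is None or chunks < max_chunks:
        batch = fetch_chunk(chunk_size)
        if not batch:
            break  # nothing left to delete
        delete_chunk(batch)
        deleted += len(batch)
        chunks += 1
    return deleted


# In-memory stand-in for the datastore, for illustration only.
store = list(range(1000))

def fake_fetch(n):
    return store[:n]

def fake_delete(entities):
    del store[:len(entities)]

print(delete_in_chunks(fake_fetch, fake_delete, max_chunks=2))  # capped run
print(delete_in_chunks(fake_fetch, fake_delete))                # runs until empty
```

Capping the number of chunks per run is one way to spread the deletion over several sessions instead of burning through a quota in one go.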

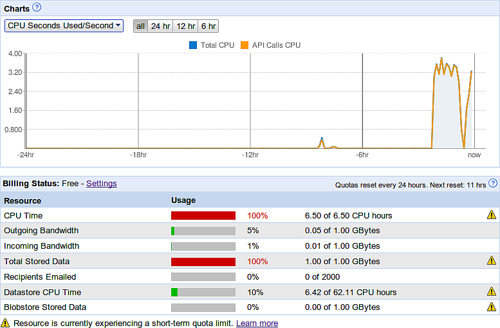

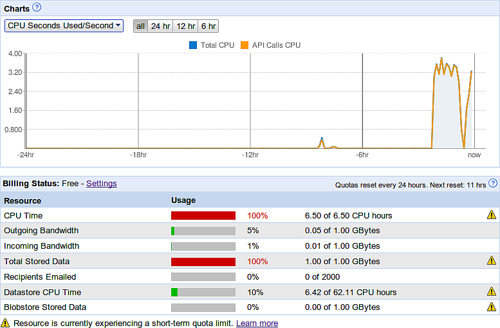

A seemingly simple script, but after a couple of hours of execution (having deleted roughly 200,000 entries), I started seeing a 503 Service Unavailable exception. I thought this had to do with network issues, but soon realized that was not the case. I had run out of my CPU time quota!

To delete 200,000 entries, the engine had used up 6.5 CPU hours, and it managed to do this in less than 2 hours of wall-clock time! According to the graphs, it had assigned 4 CPU cores to the task. At this rate, with one day's quota covering roughly 200,000 deletions, it will take me 4 days just to delete the 800,000 entries from my application. The Datastore CPU time quota is 62.11 hours, but there is an upper cap of 6.5 hours on the Total CPU time quota, and Datastore CPU time does not appear to be counted separately. I am not sure how this works!
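The four-day estimate is simple arithmetic on the figures above. The sketch below redoes it, assuming that the 6.5-hour quota resets once a day and that the CPU cost per deleted entry stays constant; both are assumptions rather than anything the dashboard states.

```python
import math

CPU_HOURS_USED = 6.5       # total CPU quota consumed by the run
ENTITIES_DELETED = 200000  # roughly what the run deleted
TOTAL_ENTITIES = 800000    # roughly what had to be deleted
WALL_CLOCK_HOURS = 2.0     # upper bound on how long the run took

cpu_seconds_per_entity = CPU_HOURS_USED * 3600 / ENTITIES_DELETED
total_cpu_hours = CPU_HOURS_USED * TOTAL_ENTITIES / ENTITIES_DELETED
days_needed = math.ceil(total_cpu_hours / CPU_HOURS_USED)  # assumes a daily quota reset
avg_parallelism = CPU_HOURS_USED / WALL_CLOCK_HOURS        # lower bound: the run took under 2 hours

print(cpu_seconds_per_entity)
print(total_cpu_hours)
print(days_needed)
print(avg_parallelism)
```

That works out to about 0.117 CPU seconds per deleted entry, 26 CPU hours in total, and an average of at least 3.25 cores kept busy, which is consistent with the 4 cores shown in the graphs.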


As seen in the screenshot above, the script ran for about 2 hours before running out of CPU. There was no other appreciable CPU usage in the last 24 hours. Since no other task was taking up CPU, the 6.42 hours of Datastore CPU time seem to be included in the 6.5 hours of Total CPU time. So how am I supposed to use the remaining 55 hours or so of Datastore CPU time?

I am not sure whether I am doing something wrong, but assuming there is no better way of doing things, here are my observations:

- It is easy to get data into the system.

- It is not easy to query the data (a fetch returns at most 500 entries, and since joins are done in code, this is severely restrictive).

- There is a total storage limit of 1GB for the free account.

- It is not easy to purge entities; the simplest way to delete data is to delete it in chunks.

- Deleting data is highly CPU intensive, and you can run out of CPU quota fairly quickly.

So what kind of applications can we build that are neither IO intensive nor CPU intensive? What is Google’s strategy here? Am I missing something? Is anything wrong with my analysis?